Editor’s note: This post is a spiritual successor to Technical Scalability Creates Social Scalability.

Over the past two years, the scaling debate has narrowed and fixated on the central question of modularity vs integration.

(Note that discourse in crypto often conflates “monolithic” and “integrated” systems. There is a rich history of debate in technology over the last 40 years about integrated vs. modular systems at every layer of the stack. The crypto incarnation of this dialogue should be framed through the same lens; this is far from a new debate).

In thinking through modularity vs. integration, the most important design decision a chain can make is how much complexity to expose up the stack to application developers. The customers of blockchains are application developers, thus design decisions should ultimately be considered for them.

Today, modularity is largely hailed as the primary way that blockchains will scale. In this post, I will question that assumption from first principles, surface the cultural myths and hidden costs of modular systems, and share the conclusions I’ve developed over the past six years of contemplating this debate.

Modular Systems Increase Developer Complexity

By far the largest hidden cost of modular systems is developer complexity.

Modular systems substantially increase complexity that application developers must manage, both in the context of their own applications (technical complexity), and in the context of interfacing with other applications and pieces of state (social complexity).

In the context of crypto systems, the modular blockchains we see today allow for theoretically more specialization but at the expense of creating new complexity. This complexity—both technical and social in nature—is being passed up the stack to application developers, which ultimately makes it more difficult to build.

For example, consider the OP stack, which seems to be the leading modular framework as of August 2023. The OP stack forces developers to opt into a Law of Chains (which comes with a lot of social complexity, as suggested by the name), or to fork away and manage the OP stack on a standalone basis. Both options create huge amounts of downstream complexity for builders. If you fork off and go your own route, are you going to receive technical support from other ecosystem players (CEXs, fiat on-ramps, etc.) that have to incur costs to conform to a new technical standard? If you opt into the Law of Chains, what rules and constraints are you placing on yourself today and, even more importantly, tomorrow?

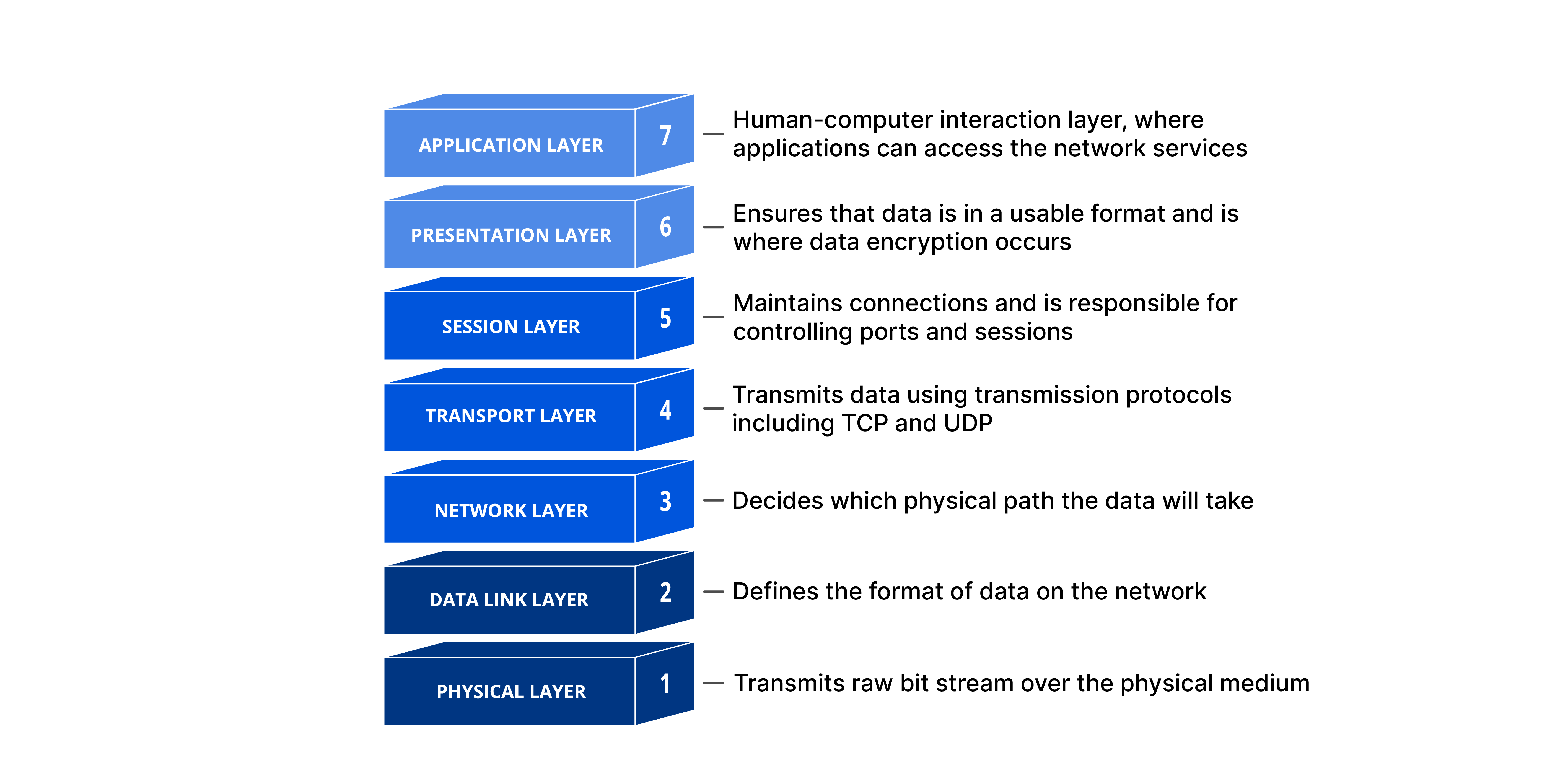

Source: The OSI Model

Modern operating systems (OSes) are large and complex systems comprising hundreds of subsystems. Modern OSes handle layers 2-6 in the image above. That is the quintessential example of integrating modular components to manage the complexity that is exposed up the stack to application developers. Application developers do not want to deal with anything below layer 7, and that is precisely why OSes exist: OSes manage the complexity of the layers below so that application developers don’t have to. Therefore, modularity in and of itself should not be the goal but rather a means to an end.

Every major software system in the world today (cloud backends, OSes, database engines, game engines, etc.) is highly integrated and simultaneously composed of many modular subsystems. Software systems tend to integrate over time to maximize performance and minimize developer complexity. Blockchains won’t be different.

(As an aside, the primary breakthrough of Ethereum was reducing complexity that emerged from the era of Bitcoin forks in 2011-2014.)

Modular proponents frequently highlight the Open Systems Interconnection (OSI) model to argue that data availability (DA) and execution should be separated; however, this argument is widely misunderstood. A correct first-order understanding of the issues at hand leads to the opposite conclusion: using OSI as an analog is an argument for integrated systems rather than modular ones.

Modular Chains Don’t Execute Code Faster

By design, the common definition of “modular chains” is the separation of data availability (DA) and execution: one set of nodes performs DA, while another set (or sets) performs execution. The node sets don’t have to have any overlap at all, but they can.

In practice, separating DA and execution doesn’t inherently improve the performance of either; at the end of the day, some piece of hardware somewhere in the world has to perform DA, and some piece of hardware somewhere has to perform execution. Separation can, however, reduce the cost of compute, but only by centralizing execution.

Again, this is worth reiterating: regardless of modular vs. integrated architecture, some piece of hardware somewhere has to do the work, and pushing DA and execution to separate pieces of hardware doesn’t intrinsically accelerate either or increase total system capacity.

Some argue that modularity allows for the proliferation of many EVMs to run in parallel as roll ups, which enables execution to scale horizontally. While this is theoretically correct, this comment actually highlights the constraints of the EVM as a single-threaded processor rather than addressing the fundamental premise of separating DA and execution in the context of scaling total system throughput.

Modularity alone doesn’t increase throughput.
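As a rough illustration of the argument in this section, here is a toy capacity model. It assumes total throughput is capped by whichever resource runs out first: the bytes that DA nodes can publish or the transactions that executors can process. The function name and all of the numbers are hypothetical and chosen only for readability; this is a sketch of the reasoning, not a measurement of any real chain.

```python
from typing import Sequence


def system_tps(da_bytes_per_sec: float, avg_tx_bytes: float,
               executor_tps: Sequence[float]) -> float:
    """Upper bound on transactions per second in a toy chain model.

    Throughput is limited by whichever runs out first: the bytes the DA
    nodes can publish, or the transactions the executors can process.
    """
    da_limited_tps = da_bytes_per_sec / avg_tx_bytes
    return min(da_limited_tps, sum(executor_tps))


# Hypothetical figures, not taken from any real network.
DA_BYTES_PER_SEC = 1_000_000
AVG_TX_BYTES = 250

# One integrated chain whose executor handles 4,000 TPS.
integrated = system_tps(DA_BYTES_PER_SEC, AVG_TX_BYTES, [4_000])
# The same execution capacity split across four roll ups on a shared DA layer.
modular = system_tps(DA_BYTES_PER_SEC, AVG_TX_BYTES, [1_000] * 4)
# Doubling the number of roll ups adds hardware, but DA is now the bottleneck.
more_rollups = system_tps(DA_BYTES_PER_SEC, AVG_TX_BYTES, [1_000] * 8)

print(integrated, modular, more_rollups)
```

Under these assumptions all three configurations top out at the same 4,000 TPS: rearranging where the work happens does not change how much work the hardware can do, and adding executors only helps until DA becomes the limit.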

Modularity Increases Transaction Costs For Users

By definition, each L1 and L2 is a distinct asset ledger with its own state. Those separate pieces of state can communicate, albeit with more latency and with more developer- and user-complexity (i.e., via bridges, such as LayerZero and Wormhole).

The more asset ledgers there are, the more the global state of all accounts fragments. This is unequivocally terrible for chains and users across many fronts. Fragmented state results in:

- Less liquidity and therefore higher spreads for takers

- More total gas consumption (since a cross-chain transaction by definition requires at least two transactions on at least two asset ledgers).

- More duplicative computations across asset ledgers (thereby reducing total system throughput): when the price of ETH-USDC moves on Binance or Coinbase, arbitrage opportunities become available on every ETH-USDC pool across all asset ledgers. (You can easily imagine a world in which there are 10+ transactions across various asset ledgers whenever ETH-USDC price moves on Binance or Coinbase. Keeping prices in line as a result of fragmented state is an extremely inefficient use of blockspace.)

It is important to recognize that creating more asset ledgers explicitly compounds costs along all of these dimensions, especially as it pertains to DeFi.
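To make the liquidity point concrete, here is a minimal sketch that uses a fee-less constant-product pool (x * y = k) as a stand-in for a trading venue. The pool model, the reserve sizes, the trade size, and the count of ten ledgers are all illustrative assumptions rather than data from any real market, and price impact is used as a proxy for the spread a taker pays.

```python
def eth_received(usdc_reserve: float, eth_reserve: float, usdc_in: float) -> float:
    """ETH paid out by a fee-less constant-product pool for a USDC deposit."""
    return eth_reserve * usdc_in / (usdc_reserve + usdc_in)


def price_impact(usdc_reserve: float, eth_reserve: float, usdc_in: float) -> float:
    """Fraction by which the average execution price exceeds the spot price."""
    spot_price = usdc_reserve / eth_reserve
    paid_price = usdc_in / eth_received(usdc_reserve, eth_reserve, usdc_in)
    return paid_price / spot_price - 1.0


# Hypothetical totals: 20M USDC and 10,000 ETH of liquidity, one 100k USDC trade.
TOTAL_USDC = 20_000_000.0
TOTAL_ETH = 10_000.0
TRADE_USDC = 100_000.0
LEDGERS = 10

# All liquidity sits in one pool on one asset ledger.
single_ledger = price_impact(TOTAL_USDC, TOTAL_ETH, TRADE_USDC)
# The same liquidity is spread evenly across ten ledgers; the taker uses one pool.
fragmented = price_impact(TOTAL_USDC / LEDGERS, TOTAL_ETH / LEDGERS, TRADE_USDC)

print(f"single ledger: {single_ledger:.2%}, fragmented: {fragmented:.2%}")
```

In this model the price impact of a trade is simply its size divided by the pool's USDC reserve, so splitting liquidity ten ways makes the same trade roughly ten times more expensive (about 0.5% versus about 5% here). A taker could recover the original price by splitting the order across all ten pools, but only by paying for ten transactions plus the bridging between them.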

The primary input to DeFi is on-chain state (aka who owns which assets). As teams launch app chains/roll ups, they will naturally fragment state, which is strictly bad for DeFi, both in terms of managing complexity for application developers (bridges, wallets, latency, cross-chain MEV, etc.) and users (wider spreads, longer settlement times).

DeFi works best when assets are issued on a single asset ledger and trading occurs within a single state machine. The more asset ledgers, the more complexity application developers must manage, and the more costs users must bear.

App Roll Ups Do Not Create New Monetization Opportunities For Developers

Proponents of app chains/roll ups argue that incentives will lead app developers to launch their own roll ups rather than build on an L1 or L2 so that they can capture MEV back to their own tokens. However, this thinking is flawed because running an app roll up is not the only way to capture MEV back to an application-layer token, and, in most cases, not the optimal way. Application-layer tokens can capture MEV simply by encoding logic in smart contracts on a general-purpose chain. Let’s consider a few examples:

- Liquidations: If the Compound or Aave DAOs want to capture some part of the MEV that goes to liquidation bots, they can update their respective contracts to route some percentage of the fee that currently goes to the liquidator to the DAO instead. A new chain/roll up is not necessary.

- Oracles: Oracle tokens can capture MEV by offering backrunning-as-a-service to searchers, block builders, etc. In addition to a price update, an oracle can bundle any arbitrary on-chain transaction that is guaranteed to run immediately after the price update.

- NFT mints: NFT mints are rife with scalping bots. This can be mitigated by encoding a declining profit re-share. For example, if someone tries to resell their NFT within two weeks of an NFT mint, 100% of the revenue can be recaptured by the mint creator or DAO. The percentage can vary over time.
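The liquidation and NFT examples above come down to a few lines of application logic. The sketch below is written in Python purely for illustration; it is not contract code from Compound, Aave, or any NFT project, and the function names, the 25% DAO share, and the linear 28-day decay are all hypothetical. Only the two-week, 100% re-share window comes from the example above.

```python
from typing import Tuple


def split_liquidation_bonus(bonus: float, dao_share: float) -> Tuple[float, float]:
    """Split a liquidation bonus between the liquidator and the DAO.

    Returns (to_liquidator, to_dao). dao_share is a fraction in [0, 1].
    """
    if not 0.0 <= dao_share <= 1.0:
        raise ValueError("dao_share must be between 0 and 1")
    to_dao = bonus * dao_share
    return bonus - to_dao, to_dao


def resale_reshare(days_since_mint: float,
                   full_window_days: float = 14.0,
                   decay_days: float = 28.0) -> float:
    """Fraction of resale profit that goes back to the mint creator or DAO.

    100% inside the initial window, then a linear decline to zero over
    decay_days. The linear shape and its length are assumptions.
    """
    if days_since_mint <= full_window_days:
        return 1.0
    elapsed = days_since_mint - full_window_days
    return max(0.0, 1.0 - elapsed / decay_days)


# A 100-unit liquidation bonus with a hypothetical 25% routed to the DAO.
to_liquidator, to_dao = split_liquidation_bonus(100.0, 0.25)
print(to_liquidator, to_dao)

# Re-share fraction one week, four weeks, and sixty days after the mint.
print(resale_reshare(7), resale_reshare(28), resale_reshare(60))
```

Both rules live entirely at the application layer, which is the point: neither requires control over block production or a dedicated chain.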

There is no universal answer to capturing MEV to an application-layer token. However, with a little bit of thinking, app developers can easily capture MEV back to their own tokens on general-purpose chains. Launching an entirely new chain is simply unnecessary, creates additional technical and social complexity for developers to manage, and creates more wallet and liquidity challenges for users.

App Roll Ups Do Not Address Cross-App Congestion

Many have argued that appchains / roll ups ensure that a given app isn’t impacted by a gas spike caused by other on-chain activity, such as a popular NFT mint. This view is partially right, but mostly wrong.

The reason this has been a problem historically is primarily a function of the single-threaded nature of the EVM, rather than a lack of separation of DA and execution. All L2s pay fees to the L1, and L1 fees can increase at any time. During the meme coin frenzy earlier this year, trading fees on Arbitrum and Optimism exceeded $10. More recently, fees on Optimism spiked in the wake of the Worldcoin launch.

The only solution to fee spikes is to both 1) maximize L1 DA and 2) make fee markets as granular as possible.

If the L1’s resources are constrained, usage spikes in various L2s will trickle down to the L1, which will impose higher costs on all other L2s. Therefore, appchain / roll ups are not immune to gas spikes.

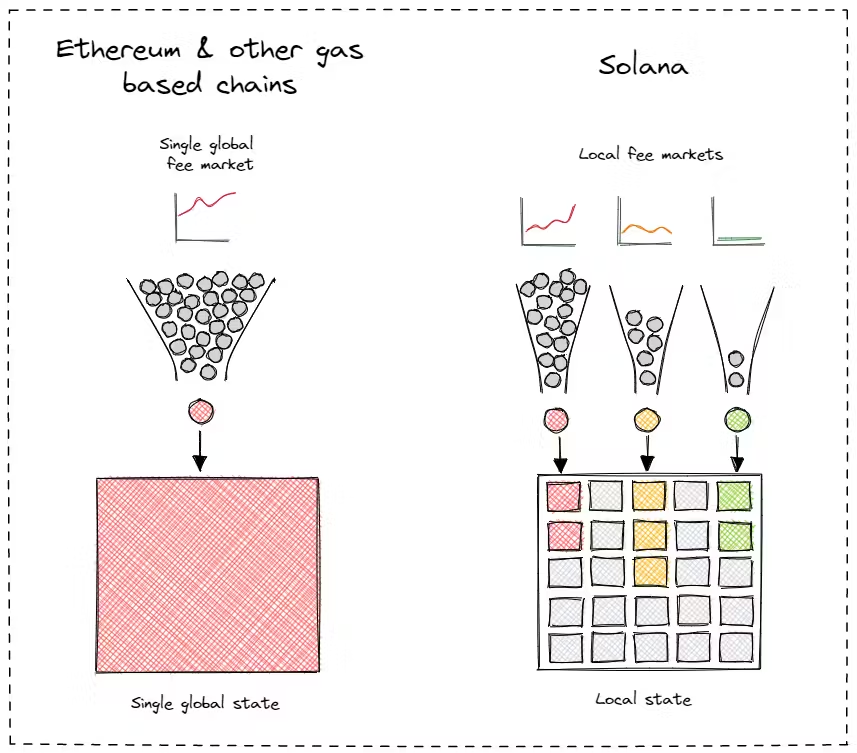

The co-existence of many EVM L2s is just a crude way to try to localize fee markets. It is better than putting everything in a single EVM L1, but does not address the core problem from first principles. When you recognize that the solution is to localize fee markets, the logical endpoint is fee markets per piece of state (as opposed to fee markets per L2).
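The difference between a single fee market and fee markets per piece of state can be sketched with a toy model. Everything here is an assumption made for illustration: transactions declare the accounts they write to, the fee multiplier is simply demand divided by capacity, and the account names and capacities are invented. It is not a description of how Solana, Aptos, or any EVM chain actually prices transactions.

```python
from collections import Counter
from typing import Dict, List, Tuple

Tx = Tuple[str, List[str]]  # (transaction id, accounts it writes to)


def global_fees(txs: List[Tx], base_fee: float, block_capacity: int) -> Dict[str, float]:
    """One fee market: every transaction pays the same congestion multiplier."""
    multiplier = max(1.0, len(txs) / block_capacity)
    return {tx_id: base_fee * multiplier for tx_id, _ in txs}


def local_fees(txs: List[Tx], base_fee: float, account_capacity: int) -> Dict[str, float]:
    """Fee market per piece of state: a transaction pays according to the
    most contended account it writes to, and nothing more."""
    demand = Counter(account for _, accounts in txs for account in accounts)
    fees = {}
    for tx_id, accounts in txs:
        hottest = max(demand[account] for account in accounts)
        fees[tx_id] = base_fee * max(1.0, hottest / account_capacity)
    return fees


# 900 transactions hit a popular NFT mint; one unrelated swap touches a DEX pool.
txs: List[Tx] = [(f"mint-{i}", ["nft_mint"]) for i in range(900)]
txs.append(("swap-0", ["eth_usdc_pool"]))

shared = global_fees(txs, base_fee=1.0, block_capacity=100)
local = local_fees(txs, base_fee=1.0, account_capacity=100)

print("swap fee, single market:", shared["swap-0"])
print("swap fee, per-state market:", local["swap-0"])
print("mint fee, per-state market:", local["mint-0"])
```

In the single market the unrelated swap pays roughly nine times the base fee because of the mint; with per-state pricing only the mint transactions pay the congestion premium. Putting each app on its own L2 approximates this at the granularity of a whole chain, while per-state pricing does it at the granularity of the contended state itself.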

Other chains have already come to this conclusion. Both Solana and Aptos naturally localize fee markets. This required a ton of engineering work over many years for their respective execution environments. Most modular proponents severely underweight the importance and difficulty of solving the hard engineering problems that make hyper-local fee markets possible.

Source: https://blog.labeleven.dev/why-solana

By launching many asset ledgers, developers are naturally increasing technical and social complexity without unlocking real performance gains, even during times when other applications are driving heightened volume.

Flexibility Is Overrated

Modular chain proponents argue that modular architectures are more flexible. This statement is obviously true. But it’s not clear that it matters.

For six years I have been trying to find application developers who need meaningful flexibility that general-purpose L1s cannot provide. But so far, outside of three very specific use cases, there hasn’t been a clear articulation as to why flexibility is important, nor how it directly helps with scaling. The three specific use cases I’ve identified in which flexibility is important are:

- Apps that leverage “hot” state. Hot state is state that is necessary for real-time coordination of some set of actions, but is ultimately not committed permanently on-chain. A few examples of hot state:

  - Limit orders in a DEX, such as dYdX and Sei (many limit orders are ultimately canceled).

  - Real-time coordination and recognition of delivery of order flow in dFlow (dFlow is a protocol to facilitate a decentralized order flow marketplace between market makers and wallets).

  - Oracles such as Pyth, which is a low-latency oracle. Pyth is live as a standalone SVM chain. Pyth produces so much data that the core Pyth team decided it would be better to send high-frequency price updates to a standalone chain, and then bridge prices over to other chains as needed using Wormhole.

- Chains that modify consensus. The best examples of this are Osmosis (in which all transactions are encrypted before being sent to the validators), and Thorchain (which prioritizes transactions within a block based on fees paid).

- Infrastructure that needs to leverage threshold signature schemes (TSS) in some way. Some examples of this are Sommelier, Thorchain, Osmosis, Wormhole, and Web3Auth.

All of the examples listed above, except for Pyth and Wormhole, are built using the Cosmos SDK, and are running as stand alone chains. This speaks volumes about the quality and extensibility of the Cosmos SDK for all three use cases: hot state, consensus-modification, and threshold signature scheme (TSS) systems.

However, most of the items identified in the three sections above are not apps. They are infrastructure.

Pyth and dFlow are not apps; they are infrastructure. Sommelier (the chain, not the yield-optimizer front end), Wormhole, Sei, and Web3Auth are not apps; they are infrastructure. Of those that are user-facing apps, they are all one specific type: a DEX (dYdX, Osmosis, Thorchain).

I have been asking Cosmos and Polkadot proponents for six years about the use cases that become unlocked from the flexibility they provide. I think there is enough data to infer a few things:

First, the infrastructure examples should not exist as roll ups because they either produce too much low-value data (e.g., hot state, and the whole point of hot state is that the data is not committed back to the L1), or because they perform some function that is intentionally orthogonal to state updates on an asset ledger (e.g., all the TSS use cases).

Second, the only type of app that I’ve seen meaningfully change core system design is a DEX. This makes sense because DEXs are rife with MEV, and because general-purpose chains by definition cannot match the latency of CEXs. Consensus is fundamental to trade execution quality and MEV, and so naturally there is a lot of opportunity for innovation in DEXs based on making changes to consensus. However, as noted earlier in this essay, the primary input into a spot DEX is the assets being traded. DEXs compete for assets, and therefore for asset issuers. In this framing, stand-alone DEX chains are unlikely to succeed, because the primary variable that asset issuers think about at the time of asset issuance is not DEX-related MEV, but general purpose smart contract functionality and the incorporation of that functionality into the app developer’s respective app.

However, this framing of DEXs competing for asset issuers is mostly irrelevant for derivatives DEXs, which primarily rely on USDC collateral and oracle price feeds, and which inherently must lock user assets to collateralize derivatives positions. As such, to the extent that standalone DEX chains make sense, they are most likely to work for derivatives-focused DEXs such as dYdX and Sei.

(Note: If you are building a new kind of infrastructure that isn’t captured by the categories above, or a consumer-facing app that genuinely requires more flexibility than what general-purpose, integrated L1s can support, please reach out! It has taken six years to distill the above, and I’m sure this list is incomplete.)

Conversely, let’s consider the apps that exist today across general-purpose, integrated L1s. Some examples: games; Audius; DeSoc systems such as Farcaster and Lens; DePIN protocols such as Helium, Hivemapper, Render Network, DIMO, and Daylight; Sound; NFT exchanges; and many more. None of these particularly benefit from the flexibility that comes with modifying consensus. They all have a fairly simple, obvious, and common set of requirements from their respective asset ledgers: low fees, low latency, access to spot DEXs, access to stablecoins, and access to fiat on-ramps such as CEXs.

I believe we have enough data now to say with some degree of confidence that the vast majority of user-facing applications have the same common set of requirements as enumerated in the prior paragraph. While some applications can optimize for other variables on the margin with customizations down the stack, the trade-offs that come with those customizations are generally not worth it (more bridging, less wallet support, less index/query provider support, fewer direct fiat on-ramps, etc.).

Launching new asset ledgers is one way to achieve flexibility, but it rarely adds value, and it almost always creates technical and social complexity with minimal ultimate gains for application developers.

Restaking Is Not Necessary To Scale DA

You’ll also hear modular proponents talk about restaking in the context of scaling. This is the most speculative argument that modular-chain proponents make, but is worth addressing.

It states roughly that because of restaking (e.g., via systems like EigenLayer), the crypto ecosystem as a whole can restake ETH an infinite number of times to power an infinite number of DA layers (e.g., EigenDA) and execution layers. Therefore, scalability is solved in all respects while ensuring value accrual to ETH.

Although there is a tremendous amount of uncertainty between the status quo and that theoretical future, let’s take it for granted that all of the layered assumptions work as advertised.

Ethereum’s DA today is roughly 83 KB/s. With EIP-4844 later this year, that roughly doubles to about 166 KB/s. EigenDA adds an additional 10 MB/s, though with a different set of security assumptions (not all ETH will be restaked to EigenDA).

By contrast, Solana today offers a DA of roughly 125 MB/s (32,000 shreds per block, 1,280 bytes per shred, 2.5 blocks per second). Solana is so much more efficient than Ethereum and EigenDA because of its Turbine block propagation protocol, which has been in production for 3 years. Moreover, Solana’s DA scales over time with Nielsen’s Law, which continues unabated (unlike Moore’s Law, which for practical purposes died for single-threaded computation a decade ago).
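The figures in the last two paragraphs can be lined up with some quick arithmetic. The sketch below only multiplies out the parameters quoted above, using decimal units (1 MB = 1,000,000 bytes, which is an assumption about the units intended); it does not measure any network.

```python
# Parameters as quoted in the text above.
SHREDS_PER_BLOCK = 32_000
BYTES_PER_SHRED = 1_280
BLOCKS_PER_SECOND = 2.5

ETHEREUM_KB_PER_SEC = 83.0
EIGENDA_MB_PER_SEC = 10.0

# Solana: bytes per block times blocks per second, converted to MB/s.
solana_mb_per_sec = SHREDS_PER_BLOCK * BYTES_PER_SHRED * BLOCKS_PER_SECOND / 1_000_000

# Ethereum after EIP-4844, taking the "roughly doubles" estimate at face value.
ethereum_4844_kb_per_sec = 2 * ETHEREUM_KB_PER_SEC

# How many times larger the Solana figure is than each alternative.
vs_ethereum_today = solana_mb_per_sec * 1_000 / ETHEREUM_KB_PER_SEC
vs_ethereum_4844 = solana_mb_per_sec * 1_000 / ethereum_4844_kb_per_sec
vs_eigenda = solana_mb_per_sec / EIGENDA_MB_PER_SEC

print(f"Solana DA from quoted parameters: {solana_mb_per_sec:.1f} MB/s")
print(f"vs Ethereum today: {vs_ethereum_today:,.0f}x")
print(f"vs Ethereum after EIP-4844: {vs_ethereum_4844:,.0f}x")
print(f"vs EigenDA: {vs_eigenda:.1f}x")
```

Multiplied out, the quoted parameters give about 102 MB/s, somewhat below the rounded 125 MB/s figure, so that number presumably rests on slightly different inputs. Either way the comparison holds: the result is roughly three orders of magnitude above Ethereum's current DA and about ten times the EigenDA figure.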

There are a lot of ways to scale DA with restaking and modularity, but these mechanisms are simply unnecessary today and introduce significant technical and social complexity.

Build For Application Developers

After contemplating this for years, I’ve arrived at the conclusion that modularity should not be a goal in and of itself.

Blockchains must serve their customers—i.e., application developers—and as such, blockchains should abstract infrastructure-level complexity so that entrepreneurs can focus on building world-class applications.

Modular building blocks are great. But the key to building winning technologies is to figure out which pieces of the stack to integrate, and which pieces to leave for others. And as it stands now, chains that integrate DA and execution inherently offer simpler end-user and developer experiences, and will ultimately provide a better substrate for best-in-class applications.

Thanks to Alana Levin, Tarun Chitra, Karthik Senthil, Mert Mumtaz, Ceteris, Jon Charbonneau, John Robert Reed, and Ani Pai for providing feedback on this post.

Disclosure: Unless otherwise indicated, the views expressed in this post are solely those of the author(s) in their individual capacity and are not the views of Multicoin Capital Management, LLC or its affiliates (together with its affiliates, “Multicoin”). Certain information contained herein may have been obtained from third-party sources, including from portfolio companies of funds managed by Multicoin. Multicoin believes that the information provided is reliable and makes no representations about the enduring accuracy of the information or its appropriateness for a given situation. This post may contain links to third-party websites (“External Websites”). The existence of any such link does not constitute an endorsement of such websites, the content of the websites, or the operators of the websites. These links are provided solely as a convenience to you and not as an endorsement by us of the content on such External Websites. The content of such External Websites is developed and provided by others and Multicoin takes no responsibility for any content therein. Charts and graphs provided within are for informational purposes solely and should not be relied upon when making any investment decision. Any projections, estimates, forecasts, targets, prospects, and/or opinions expressed in this blog are subject to change without notice and may differ or be contrary to opinions expressed by others.

The content is provided for informational purposes only, and should not be relied upon as the basis for an investment decision, and is not, and should not be assumed to be, complete. The contents herein are not to be construed as legal, business, or tax advice. You should consult your own advisors for those matters. References to any securities or digital assets are for illustrative purposes only, and do not constitute an investment recommendation or offer to provide investment advisory services. Any investments or portfolio companies mentioned, referred to, or described are not representative of all investments in vehicles managed by Multicoin, and there can be no assurance that the investments will be profitable or that other investments made in the future will have similar characteristics or results. A list of investments made by venture funds managed by Multicoin is available here: https://multicoin.capital/portfolio/. Excluded from this list are investments that have not yet been announced due to coordination with the development team(s) or issuer(s) on the timing and nature of public disclosure. Separately, for strategic reasons, Multicoin Capital’s hedge fund does not disclose positions in publicly traded digital assets.

This blog does not constitute investment advice or an offer to sell or a solicitation of an offer to purchase any limited partner interests in any investment vehicle managed by Multicoin. An offer or solicitation of an investment in any Multicoin investment vehicle will only be made pursuant to an offering memorandum, limited partnership agreement and subscription documents, and only the information in such documents should be relied upon when making a decision to invest.

Past performance does not guarantee future results. There can be no guarantee that any Multicoin investment vehicle’s investment objectives will be achieved, and the investment results may vary substantially from year to year or even from month to month. As a result, an investor could lose all or a substantial amount of its investment. Investments or products referenced in this blog may not be suitable for you or any other party. Valuations provided are based upon detailed assumptions at the time they are included in the post and such assumptions may no longer be relevant after the date of the post. Our target price or valuation and any base or bull-case scenarios which are relied upon to arrive at that target price or valuation may not be achieved.

Multicoin has established, maintains and enforces written policies and procedures reasonably designed to identify and effectively manage conflicts of interest related to its investment activities. For more important disclosures, please see the Disclosures and Terms of Use available at https://multicoin.capital/disclosures and https://multicoin.capital/terms.